AI tools such as ChatGPT and Midjourney are already fundamentally changing the work of designers. How do skills, mindsets and processes change in practice when artificial intelligence becomes both a tool and a material?

Can machines truly be creative? In the age of artificial intelligence, this question no longer seems purely philosophical; in practice, it has already been answered. The new generation of AI, known as generative AI, can produce texts, images, videos and code from just a few lines of input. For many designers, this is the first time the technology has captured their attention. Some are excited, others curious or intimidated – or all three at once. This is often followed by a pressing need to clarify what AI means for design, how design practice is changing, and where this journey will ultimately lead.

Understanding AI’s Impact on Design

AI can learn statistically relevant relationships between many types of data. For example, it can learn the relationship between text and image, text and music, or images and user preferences. The critical aspect is that AI can generalise what it has learned and apply it to data it has never seen. Put simply, this means it can predict what is statistically most likely – for instance, which image a user will like or how an image should look based on a given text.
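To make “learning a relationship and generalising it” concrete, here is a minimal sketch in Python. It assumes a toy, entirely hypothetical dataset in which a single number describes an image (its colour saturation, scaled from 0 to 1) and a label records whether a user liked it. A logistic regression, one of the simplest statistical learners and far simpler than the models behind ChatGPT or Midjourney, is fitted to these examples and then asked about saturation values it has never seen. The names `fit_logistic` and `predict` are illustrative, not part of any library.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.5, epochs=5000):
    """Fit p(like | x) = sigmoid(w * (x - mean) + b) with batch gradient descent.

    Centring x on its mean keeps the optimisation well conditioned.
    """
    n = len(xs)
    mean = sum(xs) / n
    w = b = 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            # Prediction error; for log-loss this is the gradient w.r.t. the logit.
            err = sigmoid(w * (x - mean) + b) - y
            grad_w += err * (x - mean)
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b, mean


def predict(model, x):
    """Probability that the (hypothetical) user likes an image with feature x."""
    w, b, mean = model
    return sigmoid(w * (x - mean) + b)


# Toy training data (hypothetical): image saturation -> liked (1) or not (0).
saturation = [0.10, 0.20, 0.30, 0.45, 0.55, 0.70, 0.80, 0.90]
liked = [0, 0, 0, 1, 0, 1, 1, 1]

model = fit_logistic(saturation, liked)

# Generalisation: ask about saturation values that were not in the training data.
for unseen in (0.05, 0.50, 0.95):
    print(f"saturation {unseen:.2f}: p(like) = {predict(model, unseen):.2f}")
```

The model returns a probability rather than a yes or no, which is what predicting the statistically most likely outcome means in practice.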

Two aspects are crucial to understanding AI’s impact on design: automation and augmentation. AI can automate tasks that are repetitive and relatively easy to break down into individual steps. Some experts expect AI to replace humans in such tasks and lead to job losses. In design, this will likely affect repetitive, time-consuming tasks such as rotoscoping or character animation.

In addition to automation, there is augmentation: AI can extend designers’ capabilities, giving them skills they previously did not have or could only acquire through significant professional experience. This kind of human-machine co-creation leads to entirely new ways of working in design. For instance, tools like EyeQuant or Maze can show which areas of a website attract the most attention from visitors. The AI has learned the relationship between design and attention and generates a heatmap highlighting the areas of the site that draw the most focus.

This is immensely helpful because such complex information is often difficult for designers to grasp, especially across different user personas. The AI not only assists designers but also extends their cognitive abilities, making it possible to create solutions that were previously out of reach.
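How a predicted-attention heatmap is drawn can be sketched as follows. This is not how EyeQuant or Maze work internally, since their models are not described here; the sketch only assumes that some attention model has already output a few weighted focus points on a page. It then shows a common visualisation step: spreading each point with a Gaussian blur and normalising the result so the hottest area equals 1. The page grid, the focus points and the function names are all hypothetical.

```python
import math


def attention_heatmap(focus_points, width, height, sigma=2.0):
    """Spread each (x, y, weight) focus point with a 2D Gaussian and scale to [0, 1]."""
    grid = [[0.0] * width for _ in range(height)]
    for fx, fy, weight in focus_points:
        for y in range(height):
            for x in range(width):
                dist2 = (x - fx) ** 2 + (y - fy) ** 2
                grid[y][x] += weight * math.exp(-dist2 / (2 * sigma ** 2))
    peak = max(max(row) for row in grid)
    if peak > 0:  # normalise so the hottest cell is exactly 1.0
        grid = [[value / peak for value in row] for row in grid]
    return grid


def render_ascii(grid, shades=" .:-=+*#%@"):
    """Map each normalised value to a character: denser character = more attention."""
    last = len(shades) - 1
    return "\n".join("".join(shades[round(v * last)] for v in row) for row in grid)


def hottest_cell(grid):
    """Return the (x, y) position with the highest predicted attention."""
    return max(
        ((x, y) for y in range(len(grid)) for x in range(len(grid[0]))),
        key=lambda pos: grid[pos[1]][pos[0]],
    )


# Hypothetical model output for a 24 x 12 page grid: headline, hero image, call to action.
focus_points = [(10, 2, 1.0), (5, 7, 0.6), (18, 9, 0.8)]
heatmap = attention_heatmap(focus_points, width=24, height=12)
print(render_ascii(heatmap))
print("Most attention at", hottest_cell(heatmap))
```

A real tool would render the same normalised grid as a colour overlay on the page screenshot; the ASCII version just keeps the sketch dependency-free.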

Democratising Design Practice

This new form of collaboration between intelligent machines and designers also means that design practice becomes more democratic, and professional boundaries become more fluid. Now, people without a design education can engage in creative work, such as marketers creating images for their campaigns. Conversely, designers can now generate copy that previously had to be written by copywriters. Nevertheless, trained designers are still needed to evaluate and curate the quality and strategic fit of design solutions.

AI for Design and Design for AI

Looking at AI’s application areas, two major fields emerge. First, AI is used in the design process, where it becomes a design tool. This primarily involves generative AI, whose best-known representatives are ChatGPT and image generators such as Midjourney, Stable Diffusion and DALL-E. Lesser-known methods, such as Neural Radiance Fields (NeRFs), which reconstruct 3D scenes from photos in a way similar to photogrammetry, also fall into this category. Designers can find extensive lists of AI tools for various design fields online.

Until recently, image generators produced only low-resolution images, often showing a single object. With the new generation of diffusion models, designers can now generate images intuitively from text. Alibaba’s Composer model, for example, decomposes an image into compositional elements that can be manipulated individually. It not only turns the image into a sketch but also segments and masks it according to its content, estimates its depth, recognises its semantic content, captures its intensity (light and dark values) and colour palette, and links all of this to the image description.

New Degrees of Creative Freedom

Designers can now move freely between all these elements. A text prompt can generate a portrait of a girl, whose face can then be replaced with that of a cat. This face can be altered in intensity and colour, or its silhouette transformed into a landscape. This technology offers degrees of creative freedom that were previously achievable only with considerable practice and imagination. In practical terms, it also helps maintain brand consistency, since the colour palette or style of generated images can be adjusted to match a brand.

The second field involves using design to improve the user experience of AI-based products. Here, AI becomes a design material. For designers who previously worked on non-intelligent products, the challenge is to design for a machine that makes mistakes. Traditional screen flows with clearly defined paths must be extended to cover every outcome in a confusion matrix: a table that compares a classification algorithm’s predictions with the actual results, separating correct outcomes (true positives and true negatives) from errors (false positives and false negatives). Issues such as error tolerance, trust, transparency and ethics therefore become relevant. Additionally, the question arises of how to translate user needs into data needs. This is new territory for most designers, who often need to collaborate in interdisciplinary teams with data scientists and machine learning engineers.
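A confusion matrix can be computed in a few lines. The sketch below assumes a hypothetical image-tagging feature that labels photos as “cat” or “other”; the labels, the data and the UX responses attached to each cell are invented for illustration. Each of the four cells corresponds to a situation the interface has to handle, which is why designing for AI means designing for errors as well as successes.

```python
from collections import Counter


def confusion_matrix(actual, predicted, positive):
    """Count true/false positives and negatives for a binary classifier."""
    counts = Counter()
    for truth, guess in zip(actual, predicted):
        if guess == positive:
            counts["TP" if truth == positive else "FP"] += 1
        else:
            counts["FN" if truth == positive else "TN"] += 1
    return {cell: counts[cell] for cell in ("TP", "FP", "FN", "TN")}


# Hypothetical ground truth and model output for eight photos.
actual = ["cat", "cat", "other", "cat", "other", "other", "cat", "other"]
predicted = ["cat", "other", "cat", "cat", "other", "other", "cat", "other"]

matrix = confusion_matrix(actual, predicted, positive="cat")
precision = matrix["TP"] / (matrix["TP"] + matrix["FP"])  # how often a "cat" tag is right
recall = matrix["TP"] / (matrix["TP"] + matrix["FN"])     # share of real cats the model finds

# Hypothetical design responses: every cell needs its own UX treatment.
UX_RESPONSE = {
    "TP": "Apply the tag; offer a quick way to undo it.",
    "FP": "Wrong tag shown: let users remove it and feed the correction back.",
    "FN": "Cat missed: let users add the tag manually.",
    "TN": "Nothing shown; no action needed.",
}

for cell, count in matrix.items():
    print(f"{cell}: {count:>2} -> {UX_RESPONSE[cell]}")
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Precision and recall summarise the two error types; which one matters more for a product is as much a design decision as a technical one.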

Floom: Facilitating the Design Process

The interactive visual framework Floom can ease the transition to this way of working. Built on Figma, it serves as a guide for innovating AI-based products, helping teams move through the innovation process faster and make better-informed decisions.

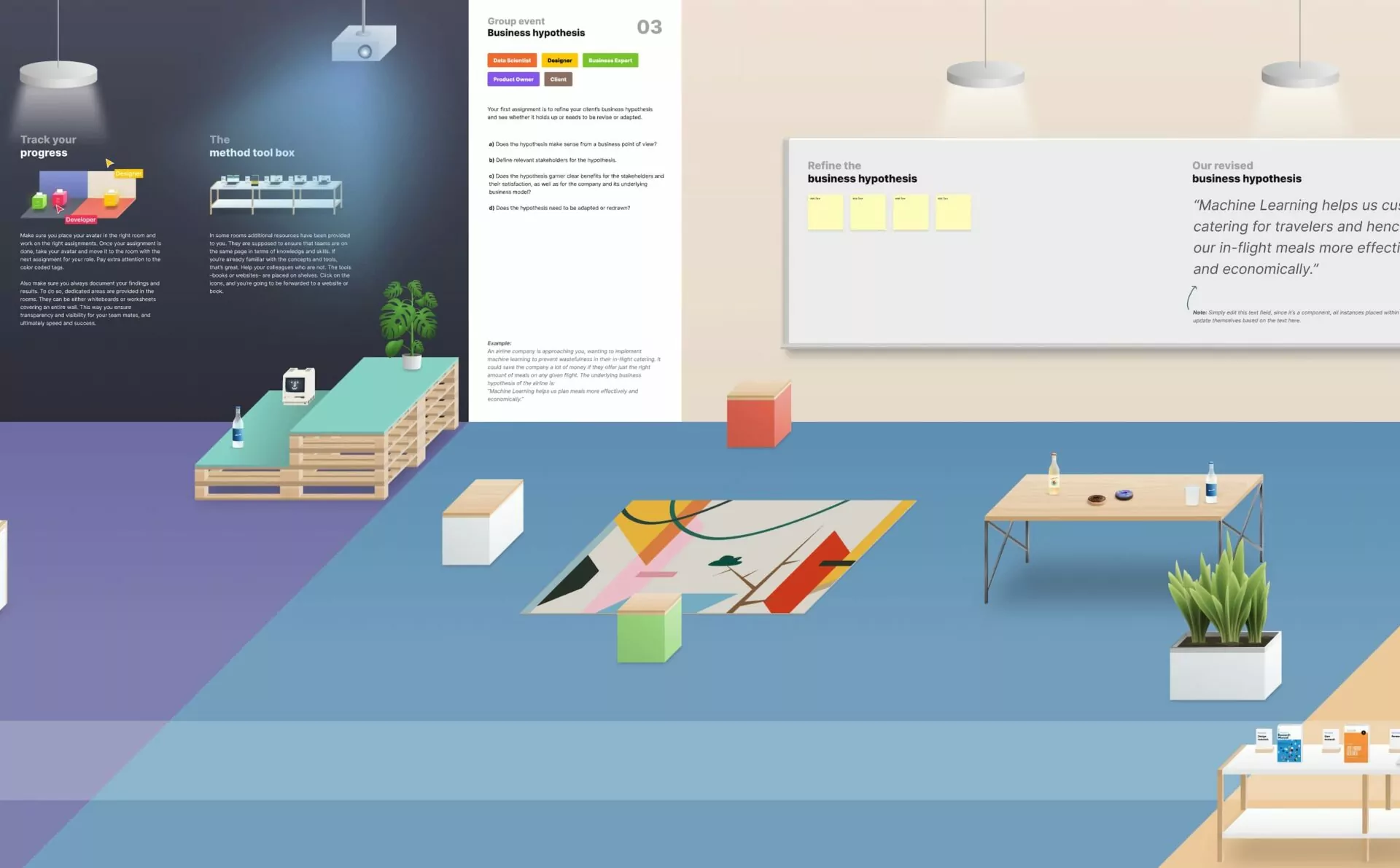

Floom is divided into rooms, each with specific tasks that need to be completed based on roles (see figure 1). Designers receive assistance through worksheets to create an intelligence experience, develop an MVP, and test prototypes. They also get support in understanding AI processes and the confusion matrix.

By the end, the team has created and launched a fully functional MVP. The framework is free and can be tailored to each team’s preferences and needs.

Fig. 1: In Floom, designers can organise tasks according to their roles in different rooms.

What’s Next?

AI is developing at a rapid pace. In May 2024, OpenAI and Google released multimodal versions of ChatGPT and Gemini that combine text, voice and images in a single, coherent interaction. In theory, designers can now use these tools for iterative support throughout the design process.

New methods such as Poisson Flow Generative Models and Consistency Models are also being developed to generate images almost instantly. This could mean that websites embed a prompt instead of a fixed image, generating a new image each time the page is loaded. Design would then become highly personalised and be created at the moment of use.

However, the biggest and most complex questions surrounding generative AI remain unresolved: the ethical and legal ones. In September 2023, authors John Grisham and George R. R. Martin joined a copyright lawsuit against OpenAI. Other lawsuits are pending against Stability AI over its image generator Stable Diffusion. The questions of which training data these tools may use and what constitutes fair compensation for training data used without the creators’ consent have serious consequences.

It’s clear that text and image generators pose a real threat to the livelihoods of many creatives. Whether existing copyrights can be enforced against these tools remains an open question, one likely to be decided by the courts in the USA and the EU. The outcome of these legal and ethical disputes will ultimately shape the future of creative machines and their users.

Links

- Extensive lists of AI tools: designundki.de